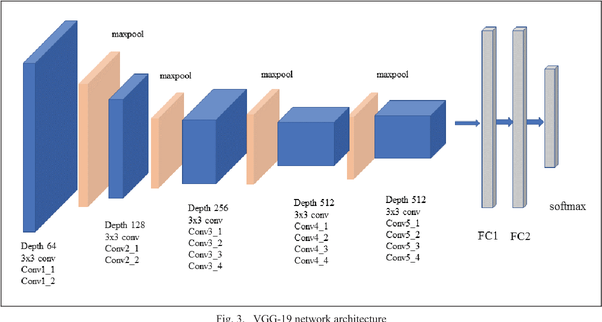

VGG19 is a variant of the VGG model with 19 weight layers: 16 convolutional layers and 3 fully connected layers. It also contains 5 max-pooling layers and 1 softmax layer, which have no trainable weights and are not counted in the 19. There are other variants of VGG, such as VGG11 and VGG16. A forward pass through VGG19 takes about 19.6 billion FLOPs.

Background

AlexNet came out in 2012 and improved on traditional convolutional neural networks. VGG can be understood as a successor to AlexNet, although it was created by a different group, the Visual Geometry Group at Oxford, hence the name VGG. It carries over some ideas from its predecessors and improves on them, using deeper stacks of convolutional layers to improve accuracy.

Let’s explore what VGG19 is and compare it with some of the other versions of the VGG architecture and also see some useful and practical applications of the VGG architecture.

Before diving into the VGG19 architecture, let’s take a look at ImageNet and some basics of CNNs.

First of all, let’s explore what ImageNet is. It is an image database of 14,197,122 images organized according to the WordNet hierarchy. It is an initiative to help researchers, students and others working in image and vision research.

ImageNet also hosts contests, one of which was the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC). It challenged researchers around the world to come up with solutions that yield the lowest top-1 and top-5 error rates (the top-5 error rate is the percentage of images for which the correct label is not among the model’s five most likely labels). The competition provides a 1,000-class training set of 1.2 million images, a validation set of 50 thousand images and a test set of 100 thousand images.
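To make the top-1 and top-5 error rates concrete, here is a minimal sketch in plain Python. The function name `top_k_error` and the toy scores are made up for illustration; they are not part of any ILSVRC tooling.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of samples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for sample_scores, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda c: sample_scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Toy data: 3 images, 6 classes (illustrative scores, not real model output).
scores = [
    [0.05, 0.10, 0.50, 0.20, 0.10, 0.05],  # true class 2 is ranked first
    [0.30, 0.25, 0.20, 0.15, 0.06, 0.04],  # true class 4 is ranked fifth
    [0.40, 0.30, 0.15, 0.10, 0.04, 0.01],  # true class 5 is ranked last
]
labels = [2, 4, 5]

print("top-1 error:", top_k_error(scores, labels, k=1))
print("top-5 error:", top_k_error(scores, labels, k=5))
```

In this toy case the second image counts as an error for top-1 but not for top-5, which is why top-5 error is always less than or equal to top-1 error.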

Then came the VGG architecture: in 2014 it outperformed many state-of-the-art models, and it is still preferred for a lot of challenging problems.

VGG19

So in simple language, VGG is a deep CNN used to classify images. The layers in the VGG19 model are as follows:

Architecture

- A fixed-size (224 * 224) RGB image was given as input to the network, which means the input matrix had shape (224,224,3).

- The only preprocessing was subtracting the mean RGB value, computed over the whole training set, from each pixel.

- Kernels of (3 * 3) size were used with a stride of 1 pixel. This is the smallest kernel size that still captures the notion of left/right, up/down and center.

- Spatial padding was used to preserve the spatial resolution of the image.

- Max pooling was performed over 2 * 2 pixel windows with stride 2.

- Each convolutional layer was followed by a rectified linear unit (ReLU) to introduce non-linearity, which helps the model classify better and reduces computation time. Earlier models used tanh or sigmoid functions, and ReLU proved much better than those.

- Three fully connected layers were implemented: the first two have 4096 channels each, and the third has 1000 channels for 1000-way ILSVRC classification. The final layer is a softmax function.
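The effect of the padding, stride and pooling choices listed above on the feature-map size can be traced with a few lines of Python. This is a sketch, not the authors' code: the helper names are made up, and the block layout (2, 2, 4, 4, 4 convolutional layers with 64 to 512 filters) is read off the Keras listing at the end of this article.

```python
def conv_output_size(size, kernel=3, stride=1, padding=1):
    """Spatial size after a convolution; 3x3, stride 1, padding 1 keeps it unchanged."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size, window=2, stride=2):
    """Spatial size after max pooling; 2x2 with stride 2 halves it."""
    return (size - window) // stride + 1


# (number of 3x3 conv layers, output channels) for the five blocks of VGG19
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def trace_shapes(input_size=224):
    """Return the (height, width, channels) shape after each block's pooling layer."""
    size = input_size
    shapes = []
    for num_convs, channels in VGG19_BLOCKS:
        for _ in range(num_convs):
            size = conv_output_size(size)
        size = pool_output_size(size)
        shapes.append((size, size, channels))
    return shapes


shapes = trace_shapes()
for block, shape in enumerate(shapes, start=1):
    print(f"after block {block}: {shape}")

height, width, channels = shapes[-1]
flattened = height * width * channels
print("inputs to the first fully connected layer:", flattened)
```

The trace shows that only the pooling layers shrink the image, from 224 down to 7, while the number of channels grows from 64 to 512.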

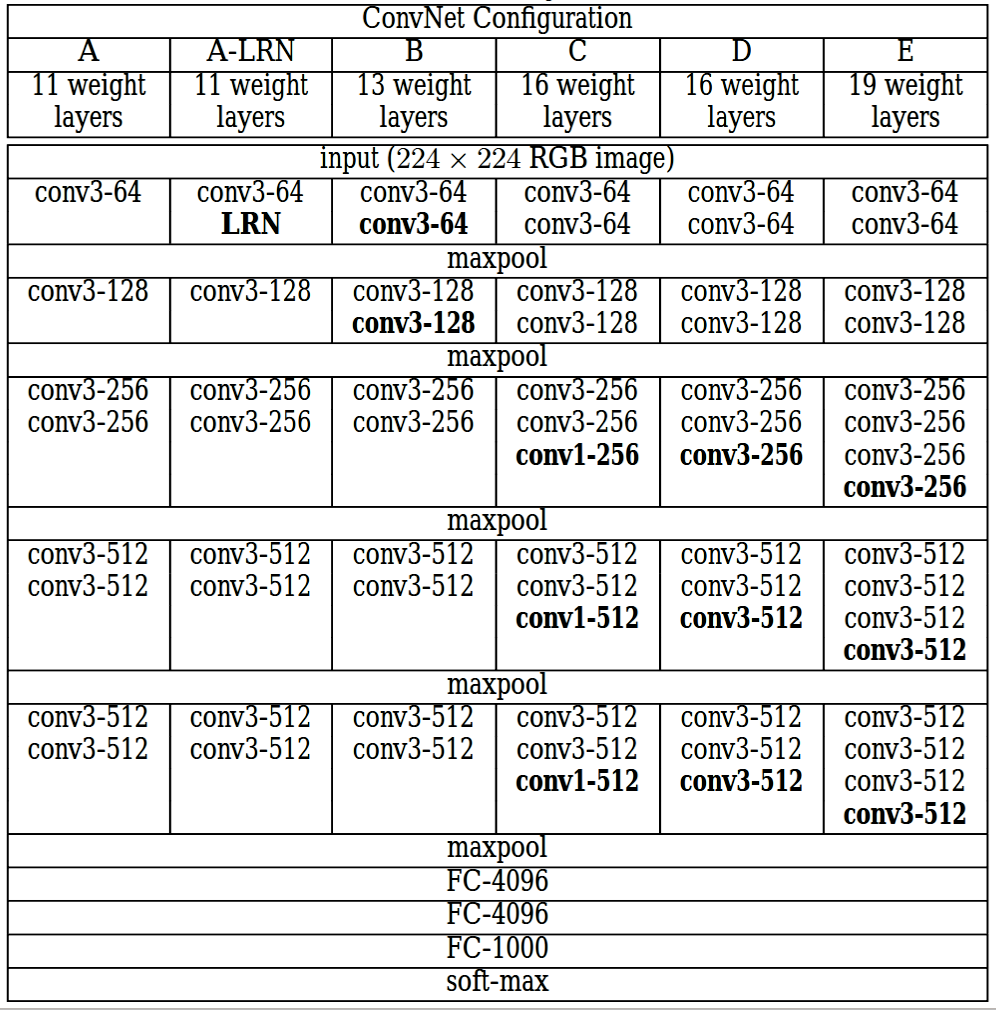

The column E in the following table is for VGG19 (other columns are for other variants of VGG models):

Results

Comparison between other state of the art models presented at ILSVRC.

Uses of the VGG Neural Network

The main purpose for which the VGG net was designed was to win the ILSVRC but it has been used in many other ways.

- It is a good general classification architecture for many other datasets. Because the authors made the models publicly available, they can be used as is or with modifications for similar tasks.

- Transfer learning: the pretrained network can be reused for other tasks, such as facial recognition.

- The weights are readily available in frameworks like Keras, so they can be tinkered with and used as needed.

- Content and style loss using the VGG-19 network.

What is the difference between VGG16 and VGG19 neural network?

VGG-16

VGG16 is a convolutional neural network model proposed by K. Simonyan and A. Zisserman from the University of Oxford in the paper “Very Deep Convolutional Networks for Large-Scale Image Recognition”. The model achieves 92.7% top-5 test accuracy on ImageNet, which is a dataset of over 14 million images belonging to 1000 classes. It was one of the famous models submitted to ILSVRC-2014. It improves on AlexNet by replacing large kernel-sized filters (11 and 5 in the first and second convolutional layers, respectively) with multiple 3×3 kernel-sized filters one after another. VGG16 was trained for weeks on NVIDIA Titan Black GPUs.
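Why stack several 3×3 filters instead of using one large filter? A stack of stride-1 3×3 layers sees the same input region as a single larger kernel while using fewer weights. The sketch below checks this arithmetic; the function names and the channel count of 256 are illustrative assumptions, and biases are ignored.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field (in input pixels) of num_layers stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel, channels):
    """Weight count (no biases) of one conv layer with `channels` inputs and outputs."""
    return kernel * kernel * channels * channels


channels = 256  # illustrative channel count, assumed equal for input and output

for num_layers, large_kernel in [(2, 5), (3, 7)]:
    field = stacked_receptive_field(num_layers)
    stacked = num_layers * conv_weights(3, channels)
    single = conv_weights(large_kernel, channels)
    print(f"{num_layers} x (3x3): receptive field {field}x{field}, "
          f"{stacked} weights vs {single} for one {large_kernel}x{large_kernel} layer")
```

Besides saving weights, the stack also applies a non-linearity after every 3×3 layer, so it is more expressive than the single large filter it replaces.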

The input to the conv1 layer is a fixed-size 224 × 224 RGB image. The image is passed through a stack of convolutional (conv.) layers, where the filters have a very small receptive field: 3×3 (which is the smallest size to capture the notion of left/right, up/down, center). In one of the configurations, it also utilizes 1×1 convolution filters, which can be seen as a linear transformation of the input channels (followed by non-linearity). The convolution stride is fixed to 1 pixel; the spatial padding of the conv. layer input is such that the spatial resolution is preserved after convolution, i.e. the padding is 1 pixel for 3×3 conv. layers. Spatial pooling is carried out by five max-pooling layers, which follow some of the conv. layers (not all the conv. layers are followed by max-pooling). Max-pooling is performed over a 2×2 pixel window, with stride 2.
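The remark that a 1×1 convolution is a linear transformation of the input channels can be illustrated in plain Python: every pixel's channel vector is multiplied by the same weight matrix, independently of its neighbours. The helper names, the tiny 2×2 feature map and the weights below are made up for the example.

```python
def conv1x1(feature_map, weights):
    """1x1 convolution: feature_map is H x W x C_in (nested lists), weights is C_in x C_out."""
    num_out = len(weights[0])
    return [
        [
            [sum(pixel[c] * weights[c][o] for c in range(len(pixel)))
             for o in range(num_out)]
            for pixel in row
        ]
        for row in feature_map
    ]


def relu(feature_map):
    """Element-wise ReLU: negative values become 0."""
    return [[[max(0.0, v) for v in pixel] for pixel in row] for row in feature_map]


# A 2 x 2 feature map with 2 channels per pixel (toy values).
feature_map = [
    [[1.0, 2.0], [0.0, -1.0]],
    [[3.0, 1.0], [2.0, 2.0]],
]
# Maps 2 input channels to 3 output channels (toy values).
weights = [
    [1.0, 0.0, -1.0],
    [0.5, 1.0, 1.0],
]

out = relu(conv1x1(feature_map, weights))
for row in out:
    print(row)
```

The spatial size stays 2×2; only the number of channels changes, from 2 to 3.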

Three Fully-Connected (FC) layers follow a stack of convolutional layers (which has a different depth in different architectures): the first two have 4096 channels each, the third performs 1000-way ILSVRC classification and thus contains 1000 channels (one for each class). The final layer is the soft-max layer. The configuration of the fully connected layers is the same in all networks.
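The final soft-max layer turns the 1000 raw scores of the last fully connected layer into probabilities that sum to 1. A minimal sketch in plain Python, using four made-up scores instead of 1000:

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing; the result is unchanged.
    largest = max(logits)
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1, -1.0]  # toy scores for 4 classes
probs = softmax(logits)
predicted = max(range(len(probs)), key=probs.__getitem__)

print("probabilities:", [round(p, 3) for p in probs])
print("predicted class:", predicted)
```

The predicted class is simply the index with the highest probability, which is what `np.argmax` does in the Keras listing at the end of this article.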

All hidden layers are equipped with the rectification (ReLU) non-linearity. It is also noted that none of the networks (except for one) contain Local Response Normalisation (LRN): such normalization does not improve the performance on the ILSVRC dataset, but leads to increased memory consumption and computation time.

VGG-19

VGG-19 is a convolutional neural network that is trained on more than a million images from the ImageNet database. The network is 19 layers deep and can classify images into 1000 object categories, such as a keyboard, mouse, pencil, and many animals. As a result, the network has learned rich feature representations for a wide range of images.
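In short, VGG19 has the same structure as VGG16 with one extra convolutional layer in each of the last three blocks. The sketch below counts weight layers and trainable parameters for both. The VGG16 layout (2, 2, 3, 3, 3) is configuration D of the original paper, the VGG19 layout matches the Keras listing at the end of this article, and the function name is made up for the example.

```python
# Number of 3x3 conv layers in each of the five blocks.
CONFIGS = {
    "VGG16": [2, 2, 3, 3, 3],
    "VGG19": [2, 2, 4, 4, 4],
}
BLOCK_CHANNELS = [64, 128, 256, 512, 512]
FC_SIZES = [4096, 4096, 1000]


def count_parameters(convs_per_block, in_channels=3, final_spatial=7):
    """Count weights and biases of all conv and fully connected layers."""
    total = 0
    channels = in_channels
    for num_convs, out_channels in zip(convs_per_block, BLOCK_CHANNELS):
        for _ in range(num_convs):
            total += 3 * 3 * channels * out_channels + out_channels
            channels = out_channels
    # After five 2x2 poolings a 224 x 224 input is 7 x 7.
    features = final_spatial * final_spatial * channels
    for units in FC_SIZES:
        total += features * units + units
        features = units
    return total


for name, convs in CONFIGS.items():
    weight_layers = sum(convs) + len(FC_SIZES)
    print(f"{name}: {weight_layers} weight layers, {count_parameters(convs):,} parameters")
```

Most of the parameters sit in the three fully connected layers, which are identical in both networks, so the three extra convolutional layers add only about 5 million parameters.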

Resources

For implementation details and deeper study, refer to the original paper.

- Very Deep Convolutional Networks for Large-Scale Image Recognition (ArXiv) by Karen Simonyan and Andrew Zisserman (University of Oxford)

- Convolutional Neural Network by Piyush Mishra and Junaid N Z (OpenGenus)

- VGG16 architecture by Abhipraya Kumar Dash (OpenGenus)

- Residual Network (ResNet) by Prashant Anand (OpenGenus)

- Floating point operations per second (FLOPS) of Machine Learning models by OpenGenus

The following Keras code builds the VGG19 architecture and runs a prediction on a single image:

```python
import cv2
import numpy as np
from keras.layers import Conv2D, Dense, Dropout, Flatten, Input, MaxPooling2D, ZeroPadding2D
from keras.models import Sequential
from keras.optimizers import SGD

# (number of 3x3 conv layers, filters) for the five blocks of VGG19
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def VGG_19(weights_path=None):
    model = Sequential()
    # Channels-last input (height, width, channels), the default in current Keras.
    model.add(Input(shape=(224, 224, 3)))

    for num_convs, filters in VGG19_BLOCKS:
        for _ in range(num_convs):
            # 1-pixel zero padding keeps the spatial size after a 3x3 convolution.
            model.add(ZeroPadding2D((1, 1)))
            model.add(Conv2D(filters, (3, 3), activation='relu'))
        # 2x2 max pooling with stride 2 halves the height and width.
        model.add(MaxPooling2D((2, 2), strides=(2, 2)))

    model.add(Flatten())
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(1000, activation='softmax'))

    if weights_path:
        # The weights file must match this layer order and the channels-last layout.
        model.load_weights(weights_path)

    return model


if __name__ == "__main__":
    # OpenCV loads images in BGR order, so the means below are for B, G and R.
    im = cv2.resize(cv2.imread('cat.jpg'), (224, 224)).astype(np.float32)
    im[:, :, 0] -= 103.939
    im[:, :, 1] -= 116.779
    im[:, :, 2] -= 123.68
    im = np.expand_dims(im, axis=0)  # add the batch dimension: (1, 224, 224, 3)

    # Test pretrained model
    model = VGG_19('vgg19_weights.h5')
    # Compiling is only required for training; it is kept here from the original listing.
    sgd = SGD(learning_rate=0.1, momentum=0.9, nesterov=True)
    model.compile(optimizer=sgd, loss='categorical_crossentropy')
    out = model.predict(im)
    print(np.argmax(out))
```